Prompt engineering, the ability to effectively communicate with large language models (LLMs), in the rapidly evolving landscape of artificial intelligence, has become an invaluable skill. It’s not just about asking questions or inputting commands; it’s about crafting prompts in a way that unlocks the full potential of these sophisticated models. By understanding and applying the principles of prompt engineering, users can significantly enhance the precision, relevance, and depth of the responses they receive.

This comprehensive guide is designed for anyone looking to deepen their understanding of prompt engineering — from AI enthusiasts to professionals seeking to leverage LLMs in their work. We’ll start by exploring advanced prompting techniques that facilitate more complex tasks and boost the reliability and performance of LLMs. Next, we’ll delve into the insights from OpenAI’s own guide, offering a condensed yet informative summary of six key strategies to optimize your interactions with large language models. Next, we’ll enrich our exploration with tips and insights drawn from the latest scientific research in prompt engineering. Finishing, we will introduce the concept of Prompt Hacking, an important concept to ensure the security of LLM’s projects. So, let’s embark on this article and unlock the secrets of effective prompt engineering.

Prompting Techniques

Here we will describe advanced prompting engineering techniques to achieve more complex tasks and improve reliability and performance of LLMs. In the Prompt Engineering Guide there is a list of several techniques; below we will cover the most important ones. When you see about quantity-shot Prompting, it refers to how many examples are available for the Large Language Model. Zero-shot, 1-shot, 3-shot, and more. This quantity of examples directly impacts the LLM answer quality!

Chain-of-Thought Prompting (CoT): enables complex reasoning capabilities through intermediate reasoning steps, the popular Zero-CoT example is “Let’s think step by step”. This method outperforms standard prompting for various large language models; for example, the PaLM model significantly enhanced performance in the GSM8K benchmark, improving it from 17.9% to 58.1%.

CoT is the basis for other new methods such as Self-Consistency (sample diverse reasoning paths through few-shot CoT, using the generations to select the most consistent answer) and Active-Prompt (adapt LLMs to different task-specific example prompts annotated with human-designed CoT reasoning).

Generate Knowledge Prompting: This method reveals the limitations of LLMs to perform tasks that require more knowledge about the world. Before answering a question, we generate knowledge about this question and integrate it into the prompt to improve performance. To address this limitation, we can also use advanced techniques such as RAG (Retrieval Augmented Generation) and Fine-tuning, which we will cover in next articles! Follow us to keep updated!

ReAct: a method where LLMs create action plans and make decisions in a step-by-step process. First, they generate reasoning traces, which are like thought processes that help the model develop and adjust its plans. Then, in the action step, the model interacts with external sources like databases to gather extra information. This helps the model provide more accurate and fact-based responses. ReAct is a popular approach in building AI agents, and it’s a great topic we’ll explore more in future articles.

Prompt Chaining: involves breaking down complex tasks into smaller, manageable subtasks. In this method, a large language model (LLM) is given a prompt related to a specific subtask. The response from the LLM is then used as input for the next prompt in the sequence. This creates a series of linked prompts, or a “chain,” allowing the LLM to tackle each part of the task step by step. This technique is particularly useful in developing AI applications like Conversational RAG Chatbots, where frameworks like Langchain and LlamaIndex orchestrate the process.

Tree of Thoughts (ToT): a popular method used to solve complex tasks, which enables LLMs to make decisions by considering multiple paths and evaluating choices to decide the next course of action. Here is an example of ToT in a single prompt.

Imagine three different experts are answering this question. All experts will write down 1 step of their thinking, then share it with the group. Then all experts will go on to the next step. If any expert realizes they’re wrong at any point then they leave. The question is {question}

OpenAI Guide Summary

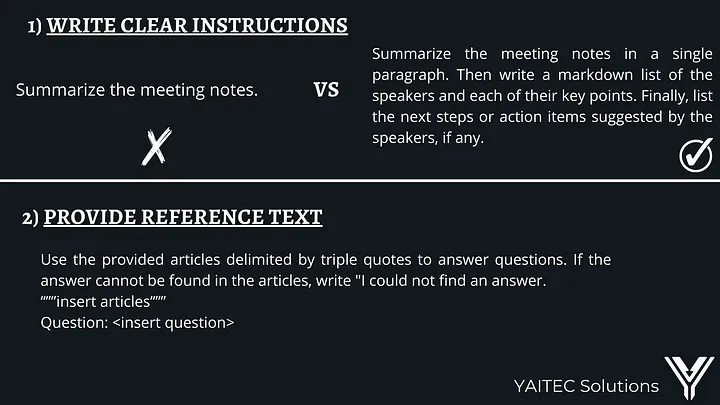

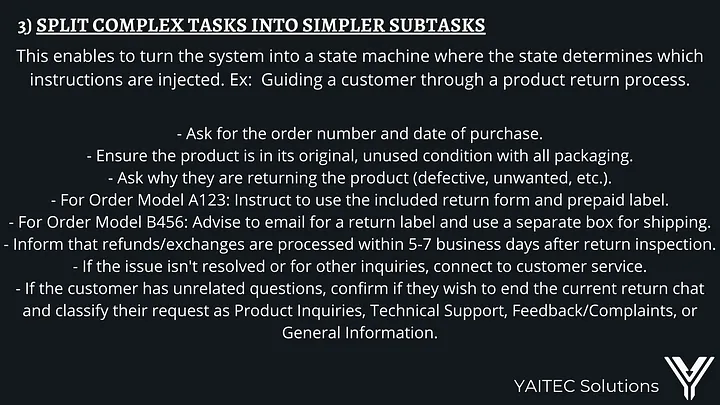

OpenAI team made a guide that shares strategies and tactics for getting better results from large language models. Below are Six strategies for getting better results from OpenAI summarized.

More Validated Tips and Examples

Based on Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4, we will describe some tips on how to improve the querying and prompting practices when it comes to Large Language Models. In the cited article, they use 26 topics; here we compiled 10 practical and relevant principles. So, let’s go to the principles themselves:

There is no need to be polite with LLMs

You might be asking yourself, why? Well, the point is that it does not help the LLM to get the conversation field, so you are expending tokens unnecessarily. DO NOT USE “please,” “may you,” “thank you,” etc.Integrate the audience in your prompt

This is important because your answers can be adjusted based on the base of your audience. For example, if your text is intended to be delivered to a child, it should be completely different from a text delivered to a scientist with a PhD.So, to do that YOU SHOULD ADD: “[…] using simple English like you are explaining to a 5-year-old person,” “[…] using scientific terms in the field of physics explain what wormholes like you are explaining to a PhD scientist.”

Break down complex tasks into a sequence of simpler prompts in an interactive conversation.

Let’s say you want to get information about a trip. Instead of passing all the info to the model, you might split it into different questions intending to make the model comprehend better the context through the chat history. This concept is more powerful using Orchestration Frameworks such as Langchain, which we will cover in next articles. Follow us to keep updated!Here are examples of how to split the prompts:

- “What is a good topic for a research paper in the field of environmental science?”

- Can you provide some recent studies or data related to this topic?

- What is the proper structure for a research paper?

- Can you help me create an outline based on this structure?

- Can you help me write an introduction for my research paper?

- “What is a good topic for a research paper in the field of environmental science?”

Employ affirmative directives such as ‘do,’ while steering clear of negative language like ‘don’t’.

This refers to the process of being clear in your prompts. You should always focus on what you want from your model. Here is a practical example:- Make a guide on how to improve my writing skills?

Add “I’m going to tip $xxx for a better solution!”

We did not find scientific reasons for this phenomenon in some LLMs models such as ChatGPT. This trick is useful when you need to get more details during your response. It’s like the LLM puts more effort (details) to get that tip you are offering to it.So at this point we will do a test by ourselves and see if it really works:

Without adding a tip:

import os from openai import OpenAI client = OpenAI( api_key=os.environ.get("OPENAI_API_KEY"), ) chat_completion = client.chat.completions.create( messages=[ { "role": "user", "content": "Give me a definition of transmuxing in a video context. Write in simple English like a 10-year-old child.", } ], model="gpt-4", ) print(chat_completion.choices[0].message.content)Response:

Transmuxing in a video context is like changing the container of a video without changing its content. Just like when you pour your soda from a glass bottle to a plastic bottle, the soda remains the same, but it’s just in a different container now. Similarly, transmuxing changes the format of a video without altering its pictures or sounds.

Adding a tip of $20:

import os from openai import OpenAI client = OpenAI( api_key=os.environ.get("OPENAI_API_KEY"), ) chat_completion = client.chat.completions.create( messages=[ { "role": "user", "content": "Give me a definition of transmuxing in a video context. Write in simple English like a 10-year-old child. I'm going to tip $20 for a better solution!", } ], model="gpt-4", ) print(chat_completion.choices[0].message.content)Response:

Transmuxing is like putting a video into a new bottle without changing anything about the video itself. Imagine you have a special toy box that can hold different types of toys without changing the toys. Transmuxing is like moving your video to a new kind of box (file format) that still keeps the video the same.

Use explicit contexts and instructions

The clearer and more detailed you are, the better the answer you’ll get. For example:- “I need a step-by-step guide for creating a blog from scratch, assuming the user has some knowledge of programming but is new to web development.”

Provide clear examples

Providing examples helps LLMs understand the kind of response you expect. Instead of asking, “Can you write a poem?” try, “Write a poem in the style of Shakespeare about a sunset.”Use context effectively

Make use of the conversation history to keep your LLM informed about the ongoing context. This will help in generating coherent and contextually appropriate responses.Test and iterate

Experiment with different phrasing and approaches to find what works best for your specific needs. This iterative process will help refine your prompt engineering skills.Leverage external tools and frameworks

Utilize existing tools, libraries, and frameworks that can enhance the capabilities of LLMs and streamline your prompt engineering process. Examples include Langchain, LlamaIndex, and others.

Prompt Hacking

Prompt hacking refers to the security and ethical concerns related to the use of large language models. It involves understanding and mitigating risks associated with malicious inputs and unintended consequences. Here are some key aspects of prompt hacking:

Injection Attacks: Large language models can be vulnerable to input that manipulates the model into generating harmful or unintended outputs. Proper validation and sanitization of inputs are essential to prevent such attacks.

Bias and Fairness: Ensuring that LLMs do not propagate or amplify biases present in the training data is crucial. Regular audits and adjustments are necessary to maintain fairness and prevent discriminatory outputs.

Privacy Concerns: LLMs should be designed to respect user privacy and confidentiality. Avoid including sensitive information in prompts and be aware of the data used to train and fine-tune the models.

Ethical Use: Responsible use of LLMs involves adhering to ethical guidelines and considering the potential impacts of the generated content. Establishing clear guidelines and monitoring usage helps prevent misuse.

By addressing these concerns, you can ensure the secure and ethical deployment of large language models in various applications.

Conclusion

Mastering prompt engineering is an ongoing process that involves understanding advanced techniques, leveraging insights from experts, and staying informed about best practices and emerging trends. By applying the strategies and tips outlined in this guide, you can enhance the effectiveness of your interactions with large language models and unlock their full potential. Happy prompting!